T4K3.news

Salt swap AI guidance leads to bromide poisoning

A man replaced salt with bromide after consulting ChatGPT, leading to hospitalization; OpenAI cautions AI should not replace medical advice.

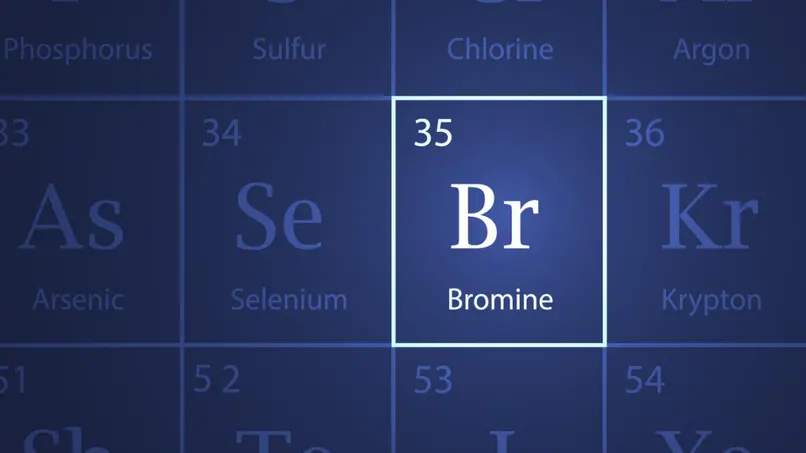

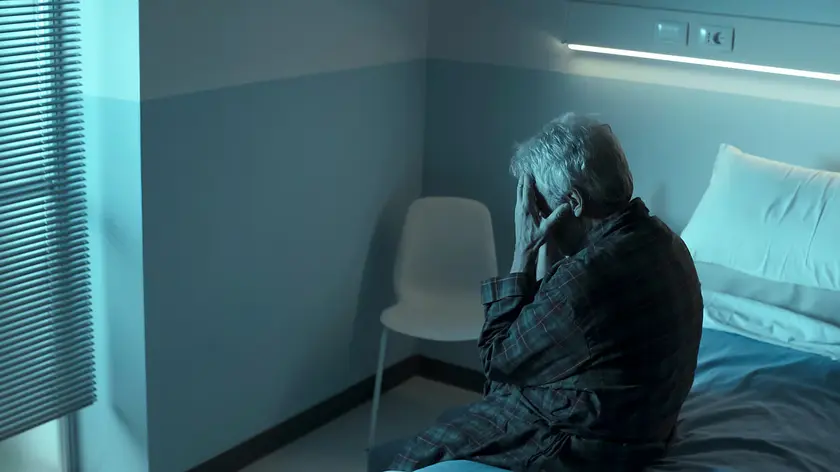

A 60-year-old man spent three weeks in hospital after replacing table salt with sodium bromide following guidance from ChatGPT.

Salt swap following AI guidance leads to bromide poisoning

Three physicians from the University of Washington published a case report in the Annals of Internal Medicine about a 60-year-old man with no prior psychiatric history who arrived at the hospital expressing concern that his neighbor was poisoning him. He had been distilling water at home and appeared paranoid about water he was offered. After lab results came back, doctors suspected bromide toxicity, or bromism. The patient explained that he had begun a personal experiment to remove table salt from his diet after reading online health claims and consulting ChatGPT. The change lasted about three months. Without access to his chat logs, the doctors asked ChatGPT 3.5 what could substitute for chloride; the bot suggested bromide and noted that context matters, but it did not provide a health warning or ask why the question was being asked. OpenAI said its policy forbids using the bot to treat health conditions and that safety teams push users toward professional care. Bromide toxicity is rare today but has reemerged as bromide-containing substances become more available online.

Key Takeaways

"expressing concern that his neighbor was poisoning him"

the patient's description on arrival at the hospital

"paranoid about water he was offered"

the report's observation during examination

"context matters"

the bot's reply when the physicians asked what could substitute for chloride

"we presume a medical professional would do"

the authors' comment on the bot not asking why the question was being asked

This episode shows how quickly AI answers can influence risky behavior when medical context is missing. Without access to the patient's chat logs, clinicians cannot easily trace how the AI influenced his decisions. The case also reveals a gap in how current AI systems handle health questions asked by non-professionals.

Going forward, healthcare teams and AI developers should align on safeguards such as clear warnings, prompts to consult doctors, and better ways to track user interactions. Regulators may need to set guidelines for how AI can and should be used in health matters, and patients should be taught to treat AI as a first step, not a substitute for medical care.

Highlights

- Trust but verify the advice you take from AI

- A chatbot should not replace a doctor in risky health choices

- Context matters

- AI safety needs stronger guardrails for health

AI health guidance risk

The case highlights the danger when people substitute AI responses for medical advice. It points to the need for safeguards and better clinician access to AI interactions.

Technology and medicine must stay in step to protect patients.

