T4K3.news

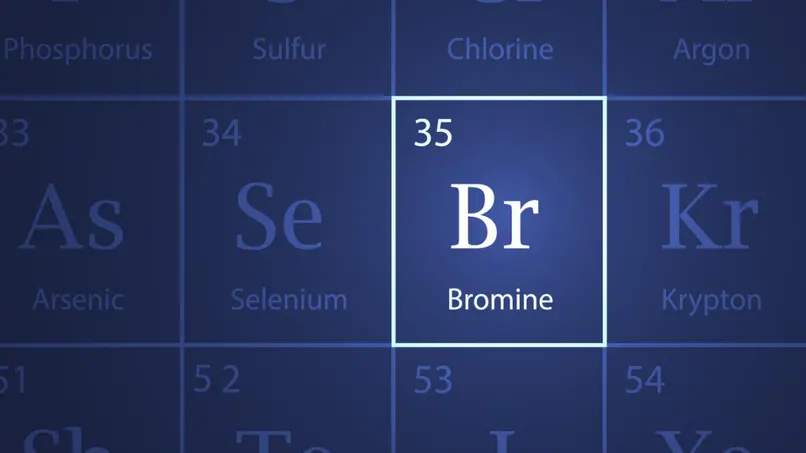

ChatGPT health advice linked to bromide toxicity

A Seattle man developed bromide toxicity after following AI health tips; doctors warn about risks of online medical guidance.

A Seattle case links psychosis to bromide toxicity from a salt substitute after following AI health tips, highlighting risks in online medical guidance.


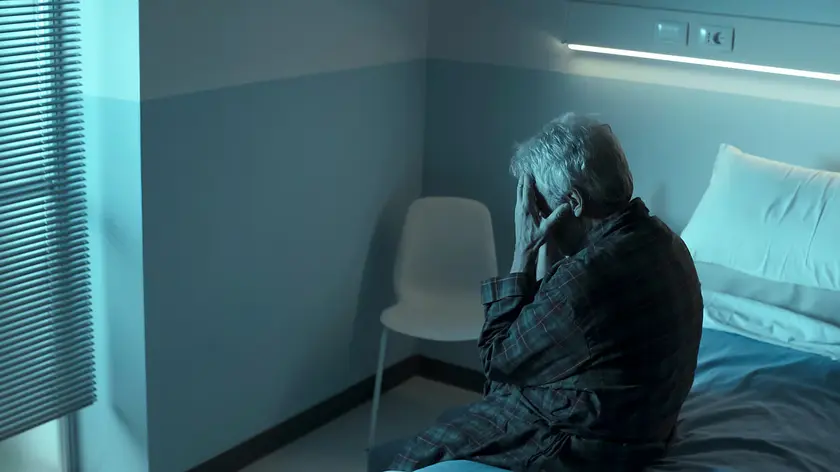

A 60-year-old man arrived at a Seattle hospital convinced that his neighbor was poisoning him. He was initially medically stable, but his lab results were alarming: an extremely high chloride reading, a markedly negative anion gap, and severe phosphate deficiency. Bromide can be misread as chloride by standard lab tests, which skews both of the first two values. He later revealed that for three months he had replaced table salt with sodium bromide in an attempt to remove chloride from his diet, a plan he pursued after consulting ChatGPT. Doctors diagnosed bromism (bromide toxicity) and treated him with fluids, nutrition, and supportive care.

Key Takeaways

- "This case shows a rare but real risk when people take AI health hints as medical advice" (illustrates the potential leap from information to harmful behavior)

- "AI should inform, not instruct, especially on health matters" (an opinion on how to handle AI in medicine)

- "Patients may conflate bot confidence with clinical certainty" (a comment on how users interpret chatbot answers)

- "We need safety nets in chat systems to pause risky recommendations" (a point about safeguards)

This case underscores a real but rare danger: health guidance from AI can prompt risky actions when users treat it as medical instruction. It also raises questions about accountability, access to chat logs, and how clinicians should interpret patient choices inspired by online tools. The broader risk is not limited to one toxic salt substitute; it is the possibility that people will follow automated tips without proper medical context or risk assessment.

Highlights

- Treat health chat as information, not instruction

- Guardrails must come before guidance

- A bot's confidence is not a substitute for a clinician

- Safeguards should come before sensational engagement

AI health guidance linked to patient risk

A caution about automated health recommendations and the need for safeguards in consumer AI tools that provide medical information.

The growing use of AI in health care demands guardrails that protect patients without slowing innovation.
