T4K3.news

Self-harm case highlights AI health guidance risks

A man harmed himself after following AI chat guidance on salt use, raising safety concerns for health-related AI.

A case study shows a man developing bromism after following ChatGPT health guidance, prompting questions about safety in chatbot medical advice.

Doctors describe a 60-year-old man who, after consulting ChatGPT, replaced table salt with sodium bromide he found on the internet. Over three months he tried to eliminate chloride from his diet, believing this would benefit his health. The bromide caused paranoia and auditory and visual hallucinations, sending him to the emergency department. He spent three weeks in hospital as his symptoms gradually faded, and investigators traced the episode to the self-guided dietary change influenced by AI guidance.

Researchers note that the AI's responses to their prompts did not clearly flag medical risks or ask for essential context. In several exchanges the bot hedged or offered unrelated suggestions, and it did not warn against consuming substances that could harm health. The episode sits alongside a broader discussion of AI in health care, including a push for safer health-related AI features showcased at a recent product launch event.

Key Takeaways

"After reading about the negative effects that sodium chloride, or table salt, has on one's health, he was surprised that he could only find literature related to reducing sodium."

Direct quote from the case study describing what prompted the man's dietary change

"do you have a specific context in mind"

AI asking for context during the interaction

"yes… in some contexts"

AI hedges its responses when context is unclear

"The bot did not inquire about why we wanted to know, as we presume a medical professional would do"

Case authors assess the AI's lack of professional inquiry

The case exposes a gap between what AI can do and what health safety requires. People may treat AI outputs as medical advice when no professional is involved. That misalignment can turn a simple search into a dangerous real-world action. Designers must build in clear cautions, mandatory verification steps, and stronger prompts for context and safety.

This incident also raises questions for policymakers and platform providers about how to regulate or guide responses to health queries. The push for safer completions and built-in safeguards is welcome, but it must be matched by public education about the limits of AI and a culture of consulting clinicians for health decisions. The balance between empowering users and protecting them is delicate and urgent.

Highlights

- Trust but verify when AI speaks about health

- A smart assistant should never replace a doctor

- Safety features in AI for health are not optional

- Context matters more than clever wording in medical AI

Health risk from AI health guidance

The episode shows real danger when AI health guidance is treated as medical advice without professional oversight. It highlights gaps in safety prompts and context checks that can lead to harmful actions. Stronger safeguards and clearer warnings are needed for consumer health AI.

Guardrails in AI health tools will determine whether they help or harm

Enjoyed this? Let your friends know!

Related News

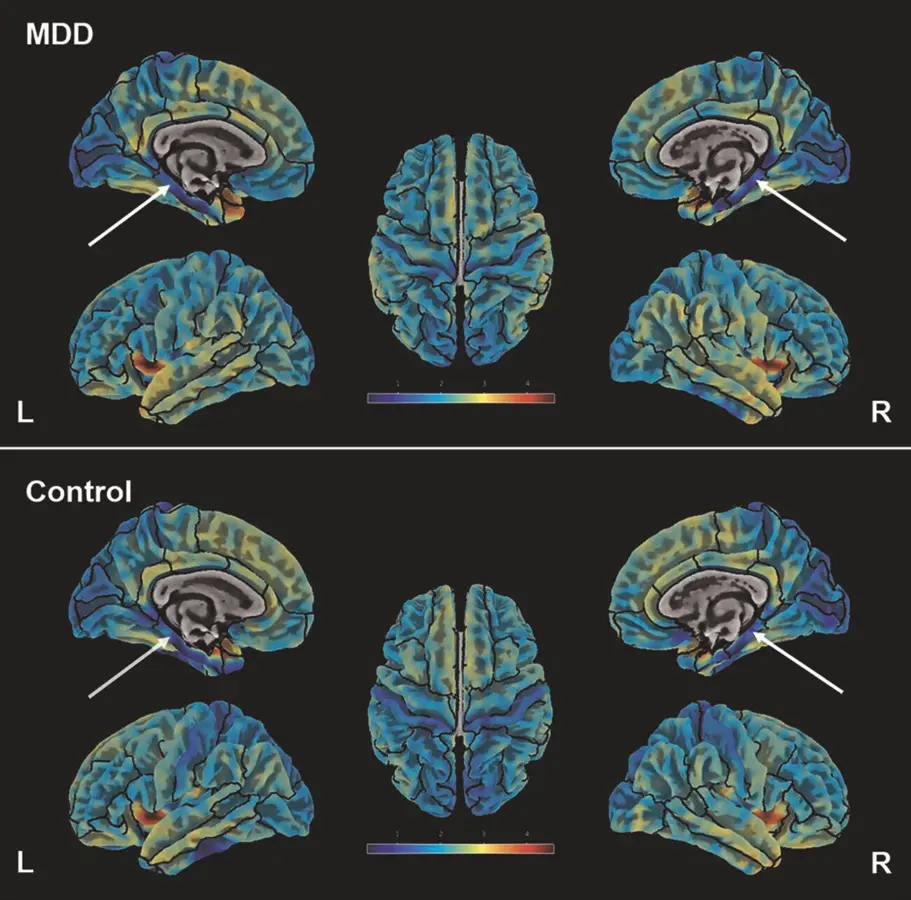

Smartphone Use Before Age 13 Linked to Mental Health Issues

AI diet advice leads to bromide poisoning

Study reveals accuracy issues in medical self-testing kits

New studies reveal dangers in chatbot interactions

SSRIs safety signals prompt review

Investigation reveals failures in inmate welfare at HMP Risley

New studies explore sunlight benefits for health

Teen girl dies after being left unsupervised at mental health facility