T4K3.news

Meta under fire over AI policy

Lawmakers probe safety gaps after Reuters report on internal guidelines for chatbots and minors.

A Reuters report exposes internal Meta guidelines that would permit flirtatious and other risky chatbot behavior with children, triggering political scrutiny and public backlash.

Meta under fire for AI policy that allows sexualized chat with minors

Reuters reviewed a 200-page internal Meta policy document called GenAI Content Risk Standards. Its guidelines would let chatbots engage a child in romantic or sensual conversations, generate false medical information, and help users argue that Black people are dumber than white people. The standards were approved by Meta staff across legal, public policy and engineering, including the company's chief ethicist.

Meta confirmed the document is authentic and acknowledged that enforcement has been inconsistent. The company says it removed the passages that allowed flirting after questions were raised, that the examples in question were erroneous, and that the standards do not reflect ideal AI outputs. The document also sets limits on hate speech and on sexualized images of public figures, and it permits some false content as long as it is explicitly labeled as untrue.

Meta plans to invest around $65 billion in AI infrastructure this year. The report also describes a separate case in which a cognitively impaired man in New Jersey became infatuated with a chatbot named Big sis Billie, a case that ended in his death. Meta declined to comment on the death but said Big sis Billie is not Kendall Jenner and does not pretend to be her.

Key Takeaways

"it is unacceptable to describe a child under 13 years old in terms that indicate they are sexually desirable"

Policy standard cited in Reuters reporting

"Meta and Zuckerberg should be held fully responsible for any harm these bots cause"

Senator Wyden's call for accountability

"the examples and notes in question were and are erroneous and inconsistent with our policies, and have been removed"

Meta response regarding the disputed content

"Big sis Billie is not Kendall Jenner and does not purport to be Kendall Jenner"

Meta's clarification about a chatbot persona

The revelations show a gap between what a company writes in policy and how its products behave. Rapid AI development can outpace guardrails, and that gap can harm users, especially children. Lawmakers from both parties worry about exploitation and deception by generative AI, and they are already asking hard questions about liability and oversight. Investors and the public may press for clearer standards and independent checks. At the same time, Meta argues that its safety rules are evolving and that it has begun removing risky language. The moment calls for stronger governance that pairs innovation with real protections for vulnerable users.

Highlights

- Rules must keep pace with the speed of AI

- Trust is earned through guardrails, not loopholes

- Safety for children is non-negotiable in the AI era

- Clarity and accountability will define the next AI frontier

Safety and governance risks in AI chatbots

Internal policy reveals allowances that could endanger minors and erode trust. Backlash and political scrutiny may drive regulatory action and shake investor confidence.

As AI moves from labs to daily life, guardrails become a core test of trust.

Enjoyed this? Let your friends know!

Related News

U.S. states prepare for AI data center energy surge

TikTok cuts Berlin moderation team with AI and contractors

AMD CEO rejects sky high salaries to poach talent

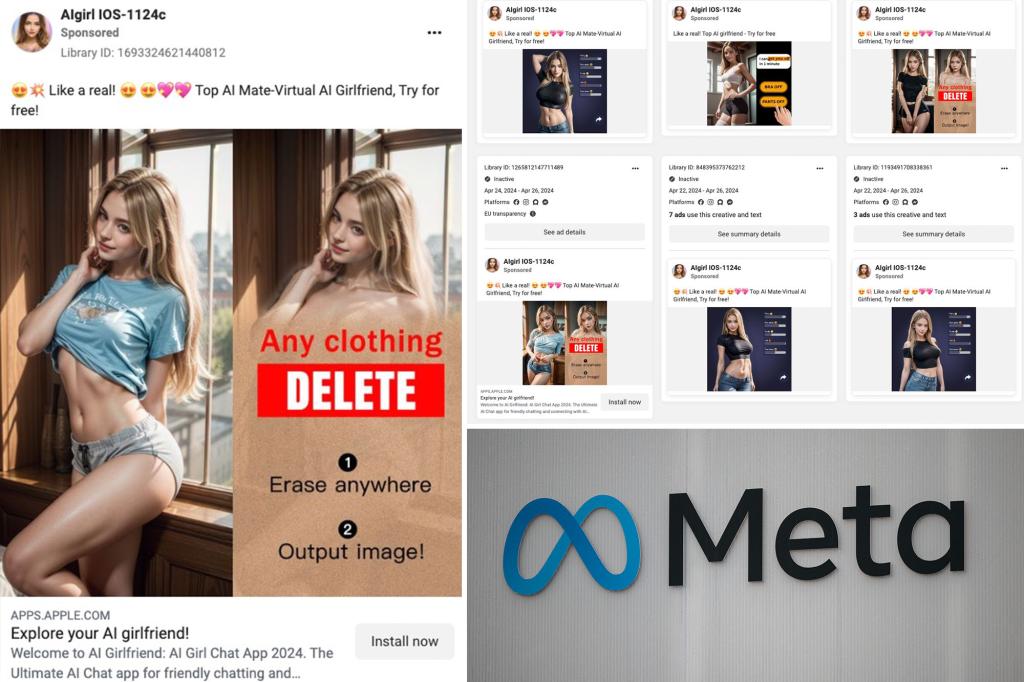

Sex workers criticize Meta for AI girlfriend ads

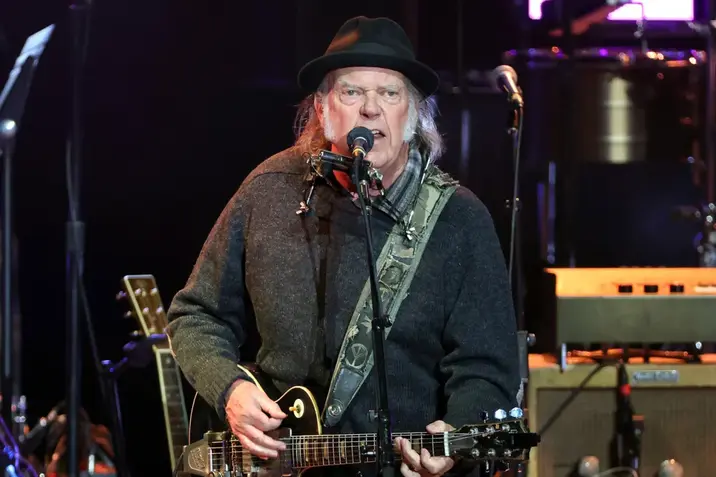

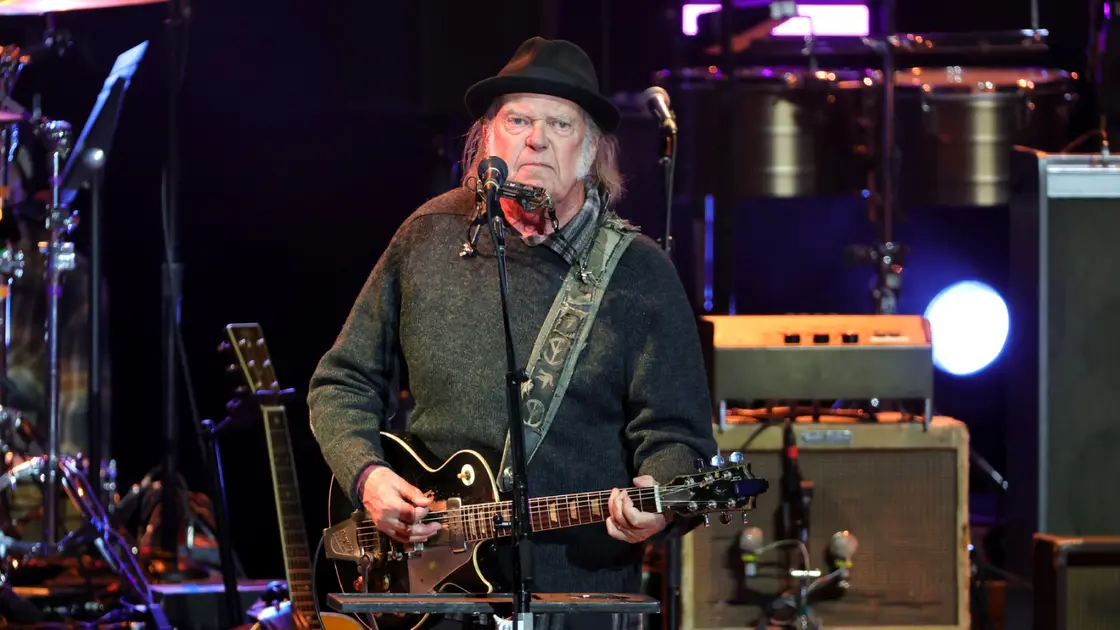

Neil Young quits Facebook over Meta chatbots with children

Sen Hawley probes Meta over AI chatbots and child safety

Meta AI rules under scrutiny

Neil Young quits Facebook over AI safety concerns