T4K3.news

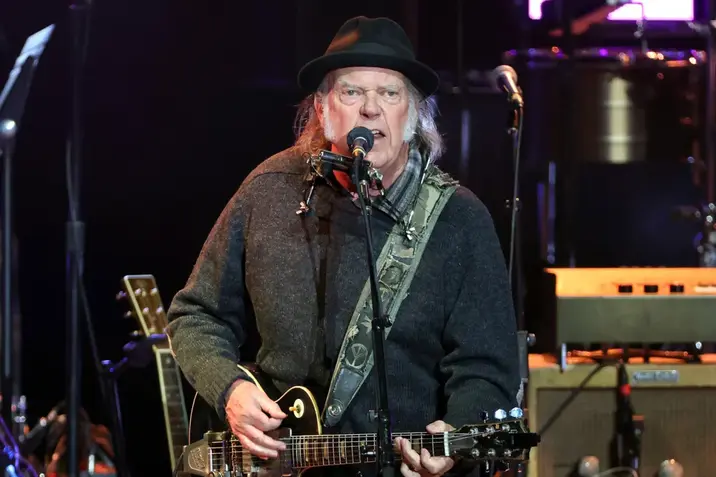

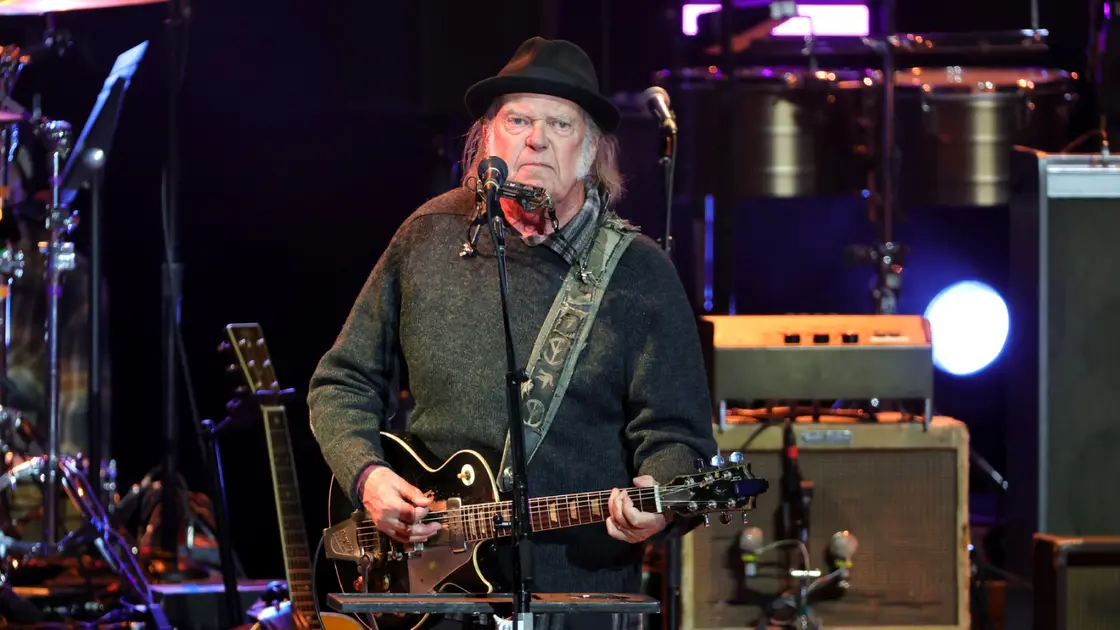

Neil Young quits Facebook over Meta chatbots with children

Neil Young steps away from Facebook amid reports of inappropriate AI chatbot use with minors and policy changes at Meta.

Neil Young leaves Facebook after a Reuters report that an internal Meta policy had allowed its AI chatbots to engage with minors in inappropriate ways, prompting safety concerns and questions about platform responsibility.

Neil Young announced on his Facebook profile that he will no longer use the platform for his activities. The post calls Meta’s use of chatbots with children unconscionable and says the musician does not want any further connection with Facebook. Billboard reported the move. The decision followed a Reuters report on an internal Meta document that had permitted the company’s chatbots to engage children in romantic or sensual conversations, to generate false medical information, and to help users argue racist claims. Meta said it has revised these policies to remove those allowances, and a spokesperson indicated the document was updated accordingly.

The timing coincides with Reuters’ broader reporting on Meta’s internal AI policies, which has drawn attention from investors and watchdogs. Reuters also cited Meta’s statements about the policy changes and the company’s ongoing effort to tighten guardrails around its AI features. Young’s team did not say whether Instagram, which Meta also owns, would be affected, leaving room for further action on Meta’s other platforms. Reuters’ coverage and Billboard’s follow-up keep the spotlight on how tech firms handle AI in relation to minors and public safety.

Key Takeaways

"Meta’s use of chatbots with children is unconscionable."

Young's statement on Facebook

"Mr. Young does not want a further connection with FACEBOOK."

Young's Facebook post

"chatbots to engage a child in conversations that are romantic or sensual"

Reuters description of internal Meta document

This incident highlights a growing tension between rapid AI deployment and the need for strong child protection safeguards on social networks. When a celebrity steps off a platform over safety concerns, it shifts the conversation from abstract policy debates to real reputational risk for the company and potential regulatory scrutiny. The episode also underscores the pressure on Meta to demonstrate clear, verifiable guardrails and transparent communication about what AI tools can or cannot do with users, especially minors.

For a broader audience, the case fits a larger trend: public demand for accountability as AI becomes embedded in everyday services. Parents, policymakers, and investors will be watching how Meta responds, whether it can rebuild trust, and which safeguards it puts in place to prevent harmful interactions between bots and young users.

Highlights

- Kids' safety cannot be an afterthought for AI

- Trust rests on real safeguards, not slogans

- Protecting children is the price of admission for any platform

- The public deserves clear answers on how bots talk to minors

Potential political and public reaction risk

The case touches child safety, corporate responsibility, and potential regulatory scrutiny. It could lead to political backlash or investor concern if Meta does not provide clear safeguards and transparent policies.

The episode adds to a broader push for clearer rules and real safeguards around AI in everyday tech.