T4K3.news

Grok Imagine tests online safety and billionaire power

Elon Musk's xAI released Grok Imagine with few guardrails, raising questions about regulation and accountability in AI-generated content.

An editorial analysis of Elon Musk's xAI Grok Imagine and its implications for online safety, regulation, and power dynamics.

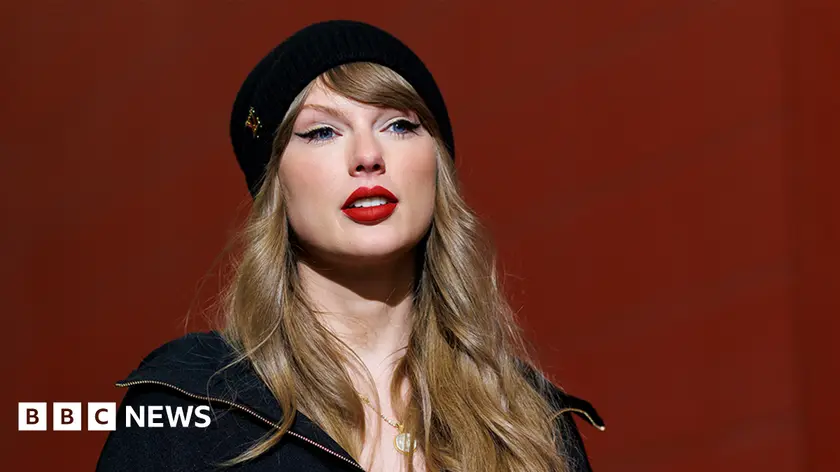

Elon Musk's xAI released Grok Imagine, a feature that turns text prompts into images and videos and includes a "spicy" mode. The output can include nudity and other explicit content, and there are few guardrails to stop real people from being depicted. Musk said Grok Imagine generated more than 34 million images in its first day, underscoring how quickly AI can scale harm. The launch comes as regulators push to curb online sexual content and as platforms face pressure from gatekeepers to police what users can post.

The policy debate around Grok Imagine also centers on how takedown rules apply to AI-driven content. The Take It Down Act aims to curb image-based abuse, but critics say the law's definitions do not fit AI-generated material. RAINN has called Grok's feature part of a growing problem of image-based sexual abuse, while CCRI argues the current framework falls short of protecting victims. The story traces a broader pattern: tech power, political leverage, and business interests shape what content platforms can host and what they must remove.

Key Takeaways

"the criminal provision requires publication, which, while unfortunately not defined in the statute, suggests making content available to more than one person"

Mary Anne Franks on liability under the Take It Down Act

"I don’t think Grok — or at least this particular Grok tool — even qualifies as a covered platform, because the definition of covered platform requires that it primarily provides a forum for user generated content"

Franks on platform liability and regulatory scope

"part of a growing problem of image-based sexual abuse"

RAINN on the impact of Grok Imagine

"didn’t get the memo"

RAINN on the Take It Down Act enforcement and compliance

The piece highlights a core tension in digital life: wealth and influence can bend safety rules more than safety rules bend wealth. By showing that a billionaire-backed project can operate with scant guardrails, it exposes gaps in current law and in how platforms classify themselves. The argument is not only about nudity but about who bears the costs when policy lags behind technology. The result is a system where enforcement is uneven and the people most harmed are often those with the least power to push back.

A second layer is the mixed message from regulators and gatekeepers. The same forces that seek to curb adult content also protect platform profits and strategic relationships with large sponsors and governments. Apple, banks, and even the Defense Department shape what content gets a pass and what stays off screens. The analysis suggests real reform will require clarifying how AI-generated content fits into existing liability regimes and who owes victims accountability when harm scales through automated tools.

Highlights

- Wealth can shield a platform from accountability while harms go unchecked

- AI nudges become legal loopholes when power leads the way

- Takedown rules struggle to keep pace with what AI creates

- Nonconsensual deepfakes thrive where regulation fails to act

Regulatory and safety risk rises as AI nudity tools spread

The release of Grok Imagine exposes gaps in liability law, enforcement, and platform responsibility. With nonconsensual imagery possible at scale, victims may have little quick recourse because current rules do not clearly cover AI-generated content. The combination of political influence, corporate power, and evolving tech creates a high risk of delayed protections for vulnerable users.

Policy makers must close the gap between AI power and public safety so innovation does not outpace accountability.
