T4K3.news

Gemini memory feature expands with opt-out controls

Google adds chat memory to Gemini 2.5 Pro with an opt-out option and regional limits

Gemini adds chat memory with an opt-out control, raising privacy questions.

Google Gemini gains chat memory with an opt-out option

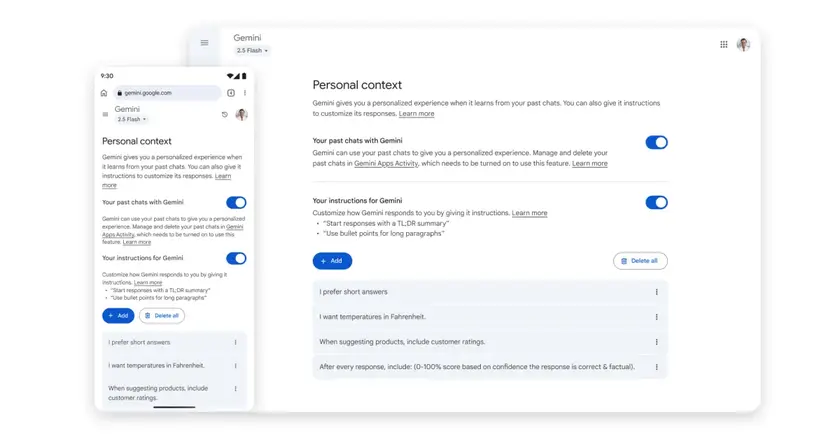

Google is adding a feature called Personal Context to Gemini 2.5 Pro that lets the AI remember details from past chats to tailor its replies. Memory is optional and can be turned on or off from the main settings page. At launch, the feature is unavailable in the European Union, the United Kingdom, and Switzerland, and users must be 18 or older. Google says memory will make responses more relevant for requests such as recommendations, while a separate saved instructions option remains available for explicit guidance.

Privacy experts warn that memory can blur the line between helpful personalization and data collection. As a privacy precaution, Google points to a temporary chat option whose conversations do not affect the model's memory. The company plans to expand the feature to more regions and to other Gemini models in the future.

Key Takeaways

"Memory should be a feature, not a trap"

tweetable take on privacy expectations

"Control over what is remembered is the real privacy test"

comment on consent and data use

"Trust hinges on clear choices and easy deletion"

importance of user control

"Memory with limits can still mislead users"

warning about memory incentives

The move reflects a wider push by big tech to turn personalized AI into a competitive edge, while testing user trust and regulatory risk. Allowing memory means retaining more data and taking on greater responsibility for how that data is stored and used. How users can control this memory will be crucial; if those controls fall short, the feature could erode user confidence. The regional limits suggest privacy rules are shaping product design, not just marketing. The real test will be whether users feel in control and whether the benefits justify the privacy costs.

Privacy risks of the chat memory feature

The feature raises questions about data retention, consent, and how memories are stored and used. Regional limits may leave users with uneven protections, and public reaction could influence regulation and policy.

The balance between usefulness and privacy will define Gemini's appeal.