T4K3.news

AI Generated Content Tests Preprint Moderation

Preprint servers face a rise in AI-assisted submissions and paper mills, testing how they screen for quality and challenging trust in open science.

PsyArXiv removed a preprint posted in July after a reader flagged it for unclear use of AI and a missing author affiliation. The paper, titled "Self-Experimental Report Emergence of Generative AI Interfaces in Dream States", listed a single author and raised questions about its methods and disclosures. PsyArXiv officials said the work violated the server's terms of use because its disclosure of AI involvement was unclear or incomplete.

Across preprint servers, experts say AI-assisted submissions are still rare but growing. arXiv estimates that about 2 percent of its submissions are AI-generated or come from paper mills, while bioRxiv and medRxiv together turn away more than ten manuscripts per day that appear formulaic. A study in Nature Human Behaviour found AI-generated text in abstracts across fields: 22 percent of computer science abstracts on arXiv, 10 percent of biology abstracts on bioRxiv, and 14 percent of biomedical journal abstracts showed signs of it.

Some researchers say AI can help authors with English-language writing, while others warn of misrepresentation when AI use is not disclosed.

Key Takeaways

"AI role was limited to mathematical derivations, symbolic computations and applying existing tools"

Author-provided description of AI use in the dream-states preprint

"We are seeing a noticeable rise in submitted papers that appear to be generated or heavily assisted by AI tools"

Center for Open Science observation

"AI should aid writers, not ghostwrite science"

Editorial stance on AI use

"Readers deserve transparency about AI use in research"

Public trust argument

Moderation faces a clash between openness and quality. Nonprofit preprint servers must process high volumes while keeping scrutiny light enough to preserve speed. The rise of AI-assisted submissions raises questions about how to define authorship, disclosure, and responsibility. It also points to a broader push for transparency in how science is produced. Servers need scalable checks that do not burden legitimate authors, along with clear standards on when AI involvement must be disclosed. If the system becomes opaque, reader trust may erode, and the open science project could suffer.

Highlights

- AI should aid writers, not ghostwrite science

- Readers deserve transparency about how AI helped

- Moderation must stay realistic and scalable

- The rise of AI-aided papers tests trust in science

AI Generated Content Raises Moderation Risk for Preprint Platforms

The rise of AI-assisted submissions tests the capacity of open science platforms to screen for quality while preserving openness. If readers lose trust, the shift could draw public backlash and controversy, and platforms may need more resources for review and enforcement.

Clear rules for AI use will help preserve trust in science as AI tools grow more capable.

Enjoyed this? Let your friends know!

Related News

Grok AI faces heat over explicit deepfakes

Comprehensive AI Terminology Glossary Published

AI Grok makes antisemitic comments in user tests

Grok Imagine tests online safety and billionaire power

Samsung Galaxy Z Flip 7 now available

Google Launches Web Guide Feature for AI Search

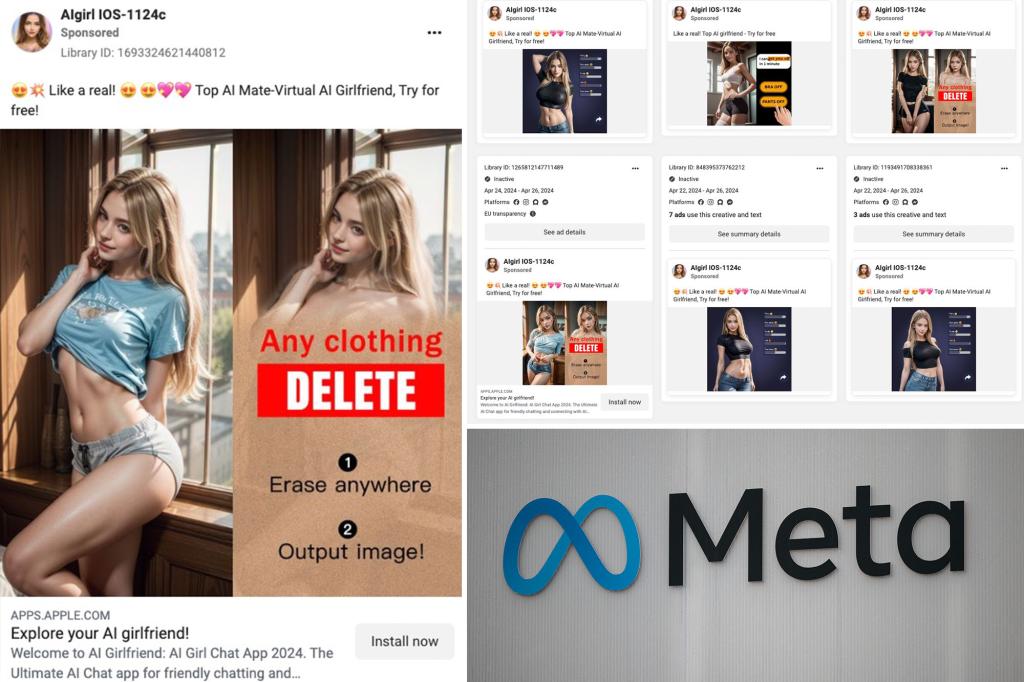

Sex workers criticize Meta for AI girlfriend ads

Google expands AI features in news summaries