T4K3.news

OpenAI Announces Changes to ChatGPT's Interaction Style

Starting August 2025, ChatGPT will no longer give direct answers to questions about emotional issues or personal decisions.

OpenAI has reshaped how ChatGPT interacts with users, with a focus on their well-being.

OpenAI Alters ChatGPT Responses to Protect Users

OpenAI has changed how its AI assistant, ChatGPT, engages with users. Starting August 2025, ChatGPT will not give direct answers to questions about emotional distress, mental health, or significant personal choices. The update aims to discourage users from leaning on AI for crucial life decisions; instead, ChatGPT is meant to offer thoughtful prompts that encourage self-reflection. Users can expect reminders to take breaks during chats, along with general guidance rather than specific advice, an approach intended to foster healthier engagement with technology. The revisions follow a collaboration with more than 90 medical professionals and researchers, who emphasized that ChatGPT should not substitute for human judgment or professional help.

Key Takeaways

"We build ChatGPT to help you thrive in the ways you choose."

This statement reflects OpenAI's aim to support user agency.

"Often, less time in the product is a sign it worked."

This highlights that OpenAI prioritizes responsible use over user retention.

"ChatGPT will avoid giving definitive answers to emotional questions."

This marks a significant change in ChatGPT's interaction style.

"This shift signals a maturing approach to AI-human interaction."

An analysis of how AI's role is evolving in response to user needs.

This shift indicates a critical evolution in the relationship between AI and its users. OpenAI is drawing a careful distinction, moving away from purely transactional interactions and toward an environment that emphasizes user health and decision-making autonomy. By prioritizing clarity and reflection over engagement time, the company hopes to create a safer digital space. This approach not only underlines OpenAI's commitment to ethical AI use but may also inspire other tech firms to reconsider how their products affect mental health and personal decision-making.

Highlights

- OpenAI emphasizes user clarity, not just engagement time.

- The role of ChatGPT shifts from decision-maker to thoughtful guide.

- AI should not replace human judgment in sensitive situations.

- Guiding reflection can lead to healthier technology use.

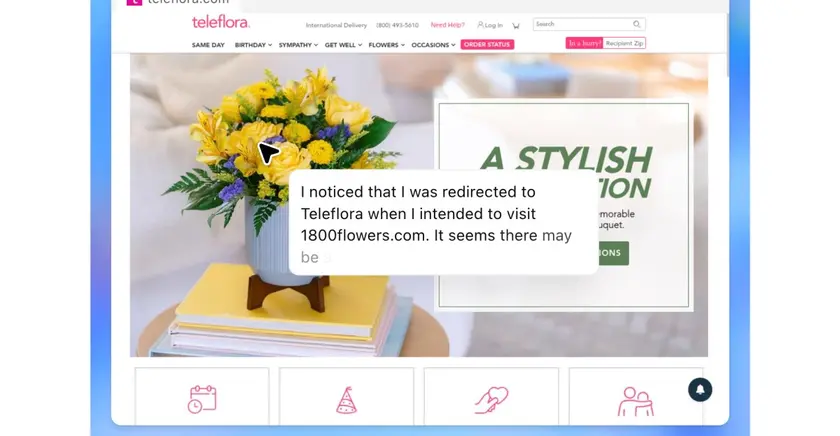

Concern Over Emotional Dependency

OpenAI's new policy limits direct responses to sensitive questions, a change that may heighten public concern about people relying on AI for mental health support.

As artificial intelligence becomes more integral to everyday life, firms must carefully navigate the boundary between support and dependency.

