T4K3.news

Ollama introduces Windows app for local LLMs

The new Ollama app simplifies interaction with local AI models on Windows.


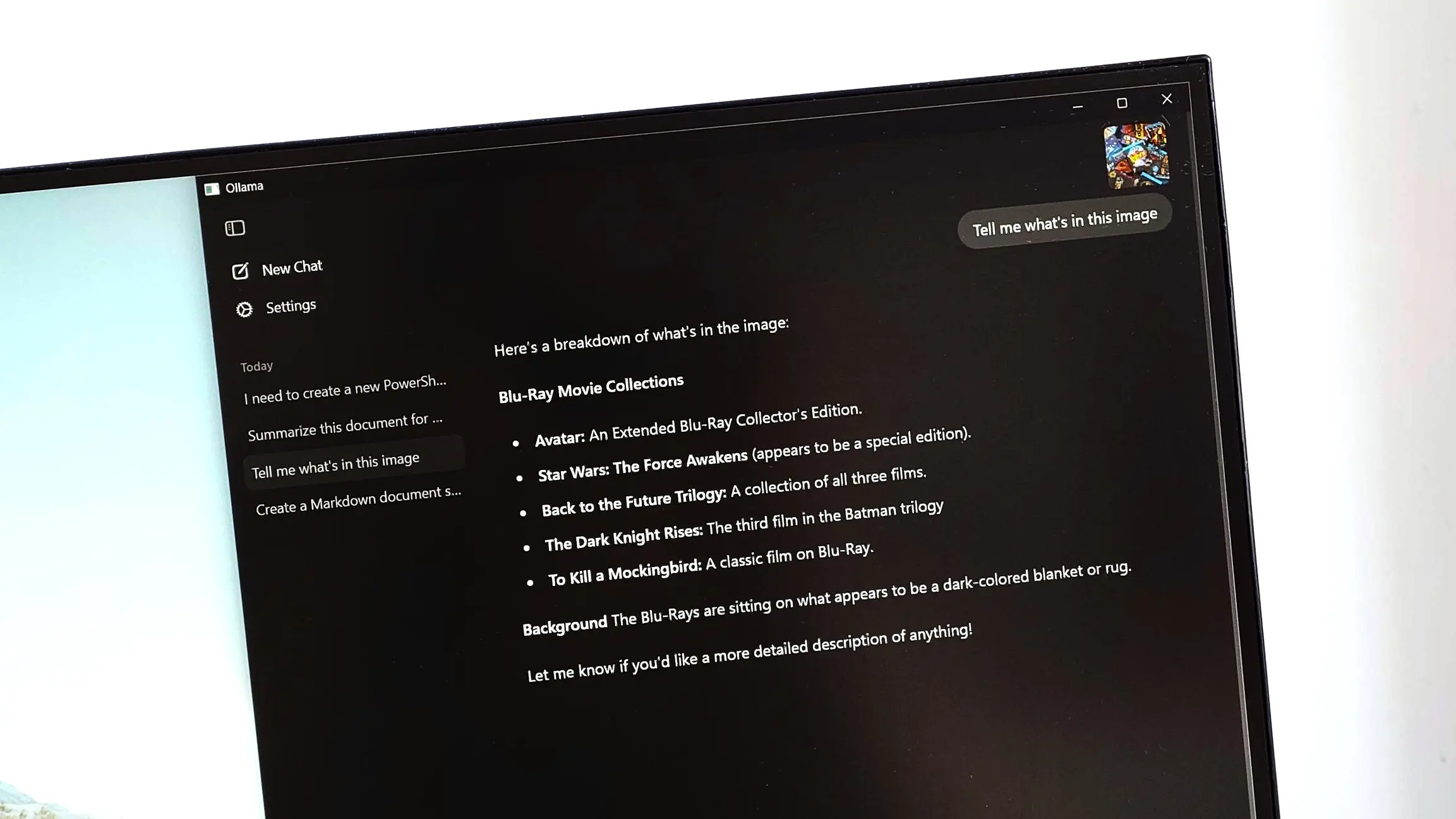

Ollama has released a Windows application designed to make interacting with local large language models (LLMs) easier. Previously, users had to rely on a command line interface, but the new graphical app simplifies the experience. Users can download and install the software from the Ollama website; once installed, it runs in the background and can be launched from the Start Menu. The graphical user interface gives straightforward access to different models through a dropdown menu.

While files can be dragged and dropped into the app for ease of use, some advanced features still require command line input. Users should note that performance depends heavily on their computer's specifications; recommended hardware includes an Intel 11th Gen or AMD Zen 4 CPU and adequate RAM. Overall, the app offers functionality such as interpreting images and code files, but the terminal remains necessary for more advanced operations. This launch represents a significant development in making machine learning more accessible.
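For readers curious what working outside the app looks like, the sketch below sends a prompt to the Ollama service running in the background. It assumes the service is listening on its default local address (`http://localhost:11434`) and that a model has already been downloaded; the model name `llama3.2` and the helper names `build_generate_request` and `generate` are placeholders chosen for illustration, not anything taken from the article.

```python
import json
import urllib.request

# Assumption: the Ollama background service uses its default local port.
OLLAMA_URL = "http://localhost:11434"


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{OLLAMA_URL}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt to a locally installed model and return its reply text."""
    request = build_generate_request(model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.loads(resp.read())["response"]


if __name__ == "__main__":
    # "llama3.2" is a placeholder; substitute any model already installed locally.
    print(generate("llama3.2", "Summarize what a local LLM is in one sentence."))
```

Response time will vary with the hardware noted above, so the generous timeout is a deliberate choice rather than a requirement.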

Key Takeaways

"A user-friendly GUI makes local LLMs accessible to everyone."

This illustrates the shift toward user accessibility in AI technology.

"While many will still rely on the CLI for advanced functions, the app simplifies everyday use."

This acknowledges that the app, while beneficial, does not replace every function of the command line.

The new Ollama application addresses a key barrier for many potential users: ease of access. Until now, local language models have largely been limited to tech-savvy individuals comfortable with command line interfaces. By providing a graphical user interface, Ollama lets a wider audience experiment with language models without extensive technical knowledge. However, because some functions still require the command line, a divide between heavy users and novices may remain. Balancing ease of use against technical capability will matter more as demand for these models continues to rise.

Highlights

- Ollama has made interacting with AI easier for everyone.

- A user-friendly interface is a game changer for local LLMs.

- No command line, no problem—Ollama's new app is here.

- This app could change how we think about local AI models.

Potential risks of hardware limitations

Running Ollama effectively requires meeting specific hardware requirements, which may put it out of reach for some users.

This app could reshape how everyday users engage with AI technology.

Enjoyed this? Let your friends know!

Related News

Geekom IT15 shows Linux power in tiny desk PC

Arm gaming gains local install support

New version of Newelle AI Assistant released

Microsoft releases Windows 11 24H2 update with new features

New tech products set to enhance your daily routine

New Windows 11 builds launched with enhanced features

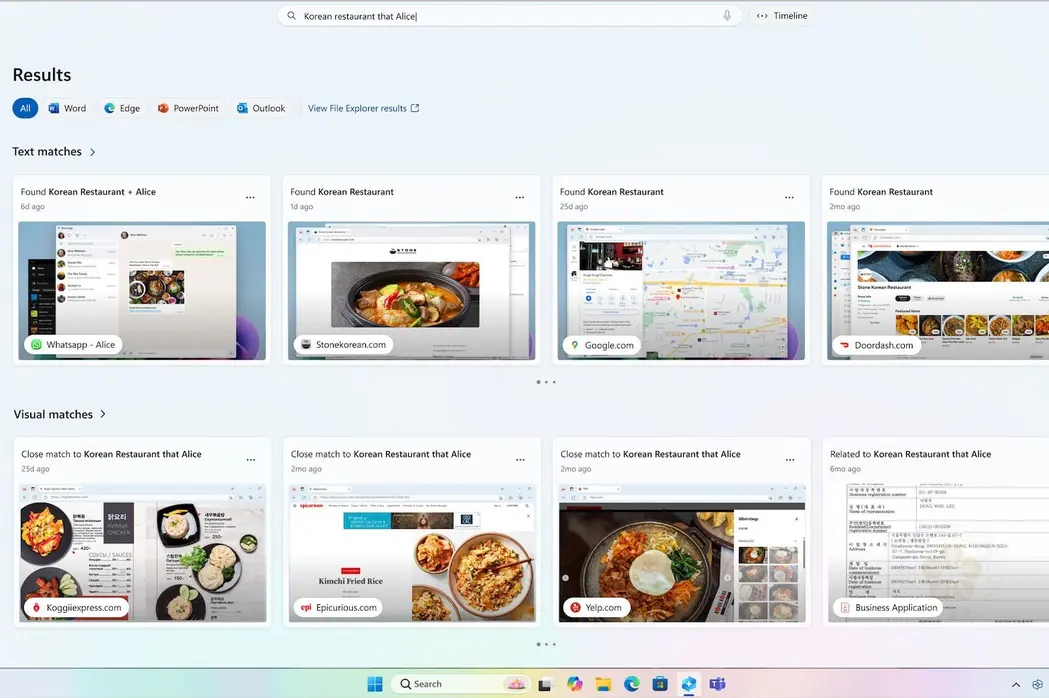

Tech Companies Block Microsoft's Recall Feature

Samsung replaces classic DeX mode with new version in One UI 8