T4K3.news

AI-generated papers spark debate on originality

Scholars question how to credit ideas when AI writes parts of research and may overlap with prior work.

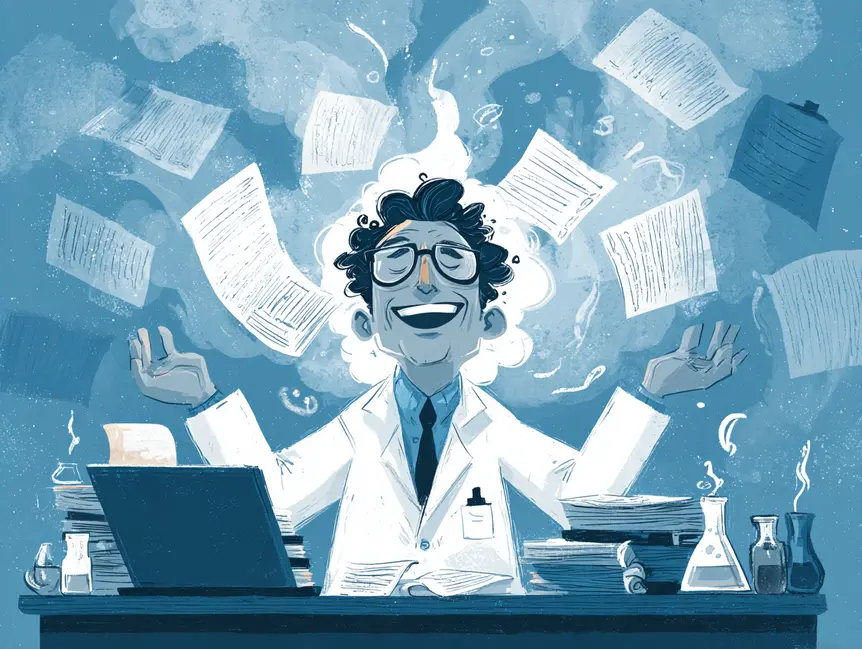

The debate over AI-created research raises the question of how originality and attribution are defined when machines generate ideas.

AI-generated papers push boundaries in science

In January, Byeongjun Park, a KAIST researcher, received notice from two colleagues in India alleging that an AI-generated manuscript had used methods from his paper without credit. The manuscript appeared online as part of The AI Scientist project, a fully automated research tool announced in 2024 by Sakana AI in Tokyo. The system uses a large language model to generate ideas, run code, and draft a paper, and it clearly labels its outputs as AI-generated.

Gupta and Pruthi of the Indian Institute of Science in Bengaluru report that several AI-generated manuscripts overlap with existing work, even when no text is copied. They note that some papers have won awards, such as an outstanding paper award at a July ACL conference in Vienna, which has intensified the debate about whether these works meet traditional standards of originality. The team behind The AI Scientist rejects the plagiarism claims, arguing that overlaps can be methodological rather than a theft of ideas. Independent experts differ on where to draw the line, which highlights how hard it is to set universal rules for idea originality in AI-driven research.

Key Takeaways

"A significant portion of LLM-generated research ideas appear novel on the surface but are actually skillfully plagiarized in ways that make their originality difficult to verify."

Gupta and Pruthi on overlaps in AI-generated work

"The plagiarism claims are false"

The AI Scientist team replying to Gupta and Pruthi

"There’s no one way to prove idea plagiarism"

Weber-Wulff on how to define plagiarism in an AI context

"These two papers are related, so, while it is an everyday occurrence by humans not to include works like this, it would have been good for The AI Scientist to cite them"

AI Scientist team on related works

The episode underlines a shift in how science is done and who is credited when machines assist or lead discovery. There is no agreed rule for idea attribution, which makes it easy for a useful approach to drift into a contested claim. Researchers warn that relying on AI to generate novel ideas without clear citations could erode trust in the scholarly record.

As AI tools remix existing knowledge, the challenge is not just copied words but tracing the lineage of ideas. This fuels a push for new norms that require disclosing AI inputs and citing related work even when the source is not text. Without these norms, the pace of AI-driven science could outstrip our ability to trace influence and credit.

Highlights

- Ideas remix; authorship demands a new map.

- Credit is the ballast for trust in AI research.

- Novelty cannot be guaranteed by a machine alone.

- There’s no single way to prove idea plagiarism.

Risk of idea plagiarism in AI research

The use of AI to generate research ideas and papers raises concerns over attribution and credibility. Without clear standards, funding bodies and publishers may face backlash or controversy as claims of overlap or non-attribution emerge.

The rules of credit in science may shift as AI becomes more embedded in research
