T4K3.news

AI impersonation hits musicians with fake song releases

AI-generated tracks released under a living artist's name are surfacing on major platforms, raising questions about consent and platform safeguards.

Artists confront a new form of fraud as impostors release AI-generated songs under their names.

Emily Portman, an award-winning folk singer from Sheffield, learned that an album called Orca had appeared on Spotify and iTunes when a fan sent her a complimentary message about it. Portman had not released a new album. The 10-track release carried titles she might plausibly have chosen, such as Sprig of Thyme and Silent Hearth, and the credits listed Portman. The voice on the tracks sounded like hers but was off in places, and the overall style was the kind of folk sound AI can readily imitate. The release spread online, confusing fans who took it for a new Portman project.

The incident points to a broader problem in the music industry: AI can imitate a real artist without consent, and platforms may struggle to verify authenticity. Portman describes the experience as unsettling, and she is not alone: a growing number of artists are sharing stories of impostor releases. The episode raises questions about how songs attributed to a living musician can appear without authorization and what platforms should do to prevent this kind of impersonation.

Key Takeaways

"English folk music is in good hands"

A fan's complimentary message that led Portman to discover the fake release

"I had not released a new album"

Portman explains why the release felt suspicious

"It was called Orca and clearly AI generated but trained on me"

Description of the fake album

"The voice on the track was close to mine but off in places"

Assessment of the AI-generated voice

The case reveals a critical tension between creative innovation and artists' control over their identity. As AI voices become more convincing, there is a growing risk of reputational harm and lost revenue when music is released in an artist's name without consent. Platforms must decide how to verify ownership and provide clear provenance for tracks, while regulators may consider new rules for licensing, consent, and takedown processes. For artists, the episode underscores the need for clear rights management and rapid-response tools to protect their identity online. It also signals that, in the era of AI music, listeners will increasingly need to verify releases rather than listen passively.

Highlights

- AI can imitate a voice but cannot imitate a career

- My name should not be used to fool fans

- This feels like identity theft set to music

- Fans deserve proof a track is real and authorized

AI voice impersonation risks for artists

The incident highlights the broader risk of AI voice cloning being used to release music under an artist's name without consent. Such releases can harm reputations, violate intellectual property rights, and confuse fans.

Guardrails for AI in art are taking shape as the industry learns to balance innovation with protection.

Enjoyed this? Let your friends know!

Related News

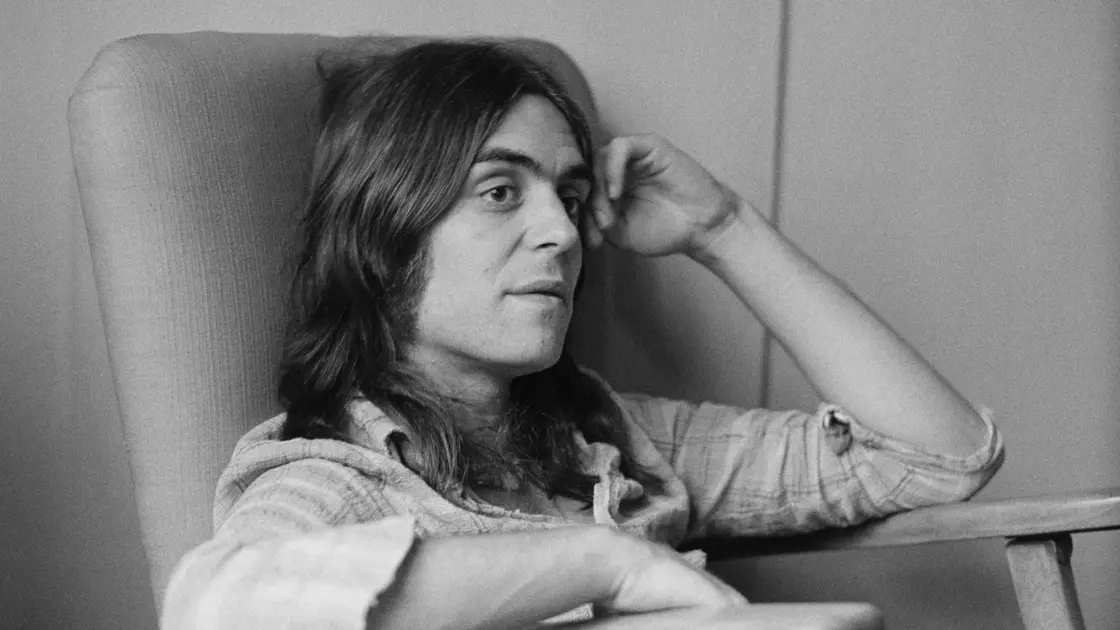

Mac DeMarco on AI and human craft

AI music creator signs first label contract

Cybersecurity Weekly Recap

TikTok users create AI-inspired content for attention

Chuck Mangione, Jazz Great, Passes Away

Buckingham Nicks Remaster Arrives September 19

Gamescom 2025 highlights summary

Disinformation Storm Hits US News Outlets